How to Conduct A/B Testing on Monetization in Your App

Topics: Guides, Monetization

Updated on November 21, 2024

Before launching, we used to just hope that our guesses about users' desires weren't too far off. Now that the app's live, we can test and know for sure.

This article's about A/B testing - an easy-to-use, budget-friendly, and powerful tool for confirming whether what we're about to do will succeed and how it could be done better.

To be more specific, we'll focus on monetization testing - at the end of the day, making money is what a commercial app's all about, right? We aim to guide you through the A/B testing methodology step by step.

Once you've read through this article, you'll be ready for your first A/B test.

What is A/B Testing for App Monetization

A/B testing is when we expose a slice of app users to a potential update - say a modified subscription plan - for a while, and then check if this "B" option brings in more revenue than the old "A" choice.

What's the Point of A/B Tests?

In essence, A/B testing means we don't just launch a new app version immediately. First, we test it to check if users prefer it over the previous one. Plus, by comparing how users react to the two app versions, we're learning about their likes and dislikes.

For example, mobile app A/B testing prior to adjusting the subscription plan helps us in the following ways:

- We don't put the whole user base at risk if the new plan backfires.

- We're picking up valuable info on which parts of the offer make users hit subscribe, renew, or cancel.

How to A/B Test Your App's Revenue-Generating Tactics?

Suppose we've settled on a way to monetize and put it into action. But are we certain we're pulling in the most revenue we can? Let's check it out.

Determine What You Want to Test

Let's break down the factors that might be affecting the revenue.

1. Balance between free content and features behind a paywall

Maybe the app has too many free features, and free version users just have no reason to subscribe. Or the opposite – users might resist subscribing because they can't adequately try the app without cost.

Here are examples of specific questions that might be of practical interest to us:

- If we shift a key free feature behind a paywall for more subscribers, could we lose too many regular users of the free version?

- If we prolong the free trial for higher retention of free users, could this backfire with fewer subscriptions?

2. Subscription offer specifics

Which features are included, the subscription length, and the price - these are elements of the subscription plan that can affect a user's decision. Fine-tuning each to match your audience isn’t straightforward.

Here are a few example questions that could be key in boosting the app's revenue:

- How much do users value added bonuses in a subscription plan, and do they matter at all?

- Is it possible to elevate revenue by increasing or, conversely, by lowering the subscription fee?

* Keep in mind, while split testing for pricing with the same plans at different price points isn't illegal, it can frustrate users and bring up ethical issues. So, think about adjusting the features when you test new prices.

- Might only offering an annual subscription turn users off, or would they be willing to accept it, leading to a more stable revenue flow?

3. Ad placement specifics

Timing of ad displays, banner positioning, and rewards for ad engagement, among other similar factors, influence your advertising income.

Some actionable questions we might ask are:

- Is it possible that simply moving the ad to a more attention-grabbing area could improve its results?

- How does changing the time between ads influence user retention?

4. Purchase experience specifics

It could be that a poorly designed interface hinders purchases if users struggle to navigate and find necessary info.

Specifically, our concerns might include:

- Will a more detailed subscription screen engage more users or, conversely, increase the bounce rate?

- Should we relocate the Subscribe button to a spot that's easier to reach?

- How about other interface elements - do they assist in users' decision-making, or cause distraction or confusion?

Decide on your top concern and construct your testing hypothesis: What will we alter and what effect do we expect?

Hypothesis example: If we increase the subscription fee by X dollars, it shouldn't heavily impact the paying user conversion, and our revenue should go up.
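To make a hypothesis like this testable, it helps to know how much conversion we can afford to lose before the price increase stops paying off. Here's a minimal Python sketch of that arithmetic. It assumes revenue per user is simply conversion rate times price, and the prices and the function name `break_even_conversion` are hypothetical, chosen only for illustration.

```python
def break_even_conversion(old_price: float, new_price: float, old_conversion: float) -> float:
    """Lowest conversion rate at which the new price earns as much per user as the old one."""
    # Revenue per user = conversion rate * price, so equal revenue means
    # old_conversion * old_price == new_conversion * new_price.
    return old_conversion * old_price / new_price


# Hypothetical numbers: a $9.99 plan converting at 5%, raised by X = $2.
floor = break_even_conversion(old_price=9.99, new_price=11.99, old_conversion=0.05)
print(f"Conversion must stay above {floor:.2%} for revenue to go up")
```

If the B group's conversion stays above this floor, the hypothesis holds; if it drops below, the higher price is costing us money.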

Choose an A/B Testing Tool

We will need an A/B testing tool - software that will track user actions and the app's revenue and report test results to us.

Here, we're going to look at Google Analytics. Why? It's a standard free tool for tracking user actions in apps, and you've probably already got it. I rarely come across a customer who doesn't.

It's equipped with an Experiments feature, which is the simplest way to kick off your first A/B test. We'll only briefly touch on other A/B testing tools for mobile apps and websites, focusing on how they might trump this straightforward choice.

1. Free or Paid A/B Testing Platforms, like VWO, Crazy Egg, etc. Google Analytics makes the list too.

- Pros: Each tool offers distinct features that may be just right for your goals.

- Cons: Any external service we plug into an app can slow it down a little.

2. Built-in A/B testing feature right inside the app.

- Pros: It's up to us what features to integrate; and the performance impact is minimal, as the app isn't sending requests to an external service.

- Cons: Costs - building this feature takes time and will require a bit of financial input.
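To give a feel for what the core of a built-in solution might look like, here's a minimal Python sketch of assigning users to the A or B group. It's an illustration under our own assumptions, not a description of any particular tool: the function name `assign_variant`, the experiment name, and the user IDs are all hypothetical. Hashing the user ID keeps each user in the same group across sessions without storing anything.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, exposure: float = 0.5) -> str:
    """Return "B" for a stable `exposure` share of users and "A" for everyone else."""
    # Hash the experiment name together with the user ID so that
    # different experiments split the user base independently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex digits into a number in the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < exposure else "A"


# Hypothetical usage: show the new subscription screen to 10% of users.
variant = assign_variant("user-42", "subscription-screen-v2", exposure=0.10)
print(variant)
```

A real feature would also need to log which variant each user saw, along with the purchases they made, so the groups can be compared later.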

Figure Out the Number of Users to Test and the Duration Needed

We'll need some stats to make sure the changes in any metric we spotted during A/B testing are actually due to something like extending the trial period, and not just a fluke. The A/B tool handles all the math.

We just need a rough estimate of how many users we should test and for how long before kicking things off. There are online A/B test calculators available for this, and they are free.

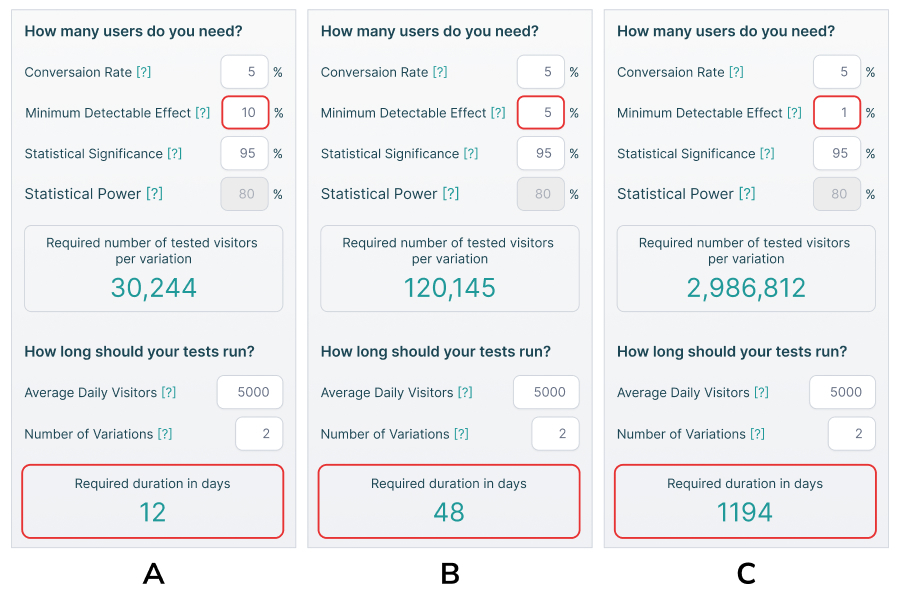

The picture above shows what an A/B test calculator looks like.

Confused? No worries, it's not complicated at all. Let's break down how to use it with a specific example.

Let's assume the following scenario: Our app gets 5k daily visitors and the Subscribe button's conversion rate is 5%. We want to A/B test (with 2 app variations: A and B) whether moving the button up will improve conversions.

Here's what we do:

- We enter these three numbers into the fields named "Average Daily Visitors", "Conversion Rate", and "Number of Variations" respectively.

- In the field named "Statistical Significance", we enter 95% - this is the standard. It means that if moving the button actually changes nothing, there's only a 5% chance the test will report a difference anyway.

- Just one field left, the "Minimum Detectable Effect". Here, we should enter the smallest relative increase in conversions we want to be able to detect. And it's this figure that will determine the number of users needed and the length of the test.

- Let's think about it - perhaps, the effect from just moving the button will be rather subtle.

Could it be a 10% lift (that's moving from 5% to 5.5%), as presented in Figure A? If so, the test needs about 12 days to detect it.

Or could it be just a 5% lift (from 5% to 5.25%), like in Figure B? Then, it'll take at least 48 days to notice. If we wrap up testing earlier, the report will most likely show no significant effect, even though moving the button does help.

Maybe it's just a 1% lift (from 5% to 5.05%), like in Figure C? If so, detecting this effect is impractical: we'd have to run the test for 1194 days, because our user base isn't sufficient.

In this way, we employ an A/B test calculator to determine, based on our basic assumptions:

- Can our hypothesis be tested with the number of visitors we get daily?

- What percentage of the app users do we want to include in the test? If we're dealing with millions of users, we might think about testing just a portion to keep the risk low.

- What's the minimum testing duration required to capture slight effects?
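If you're curious what such a calculator does under the hood, here's a rough Python sketch. It rests on assumptions of ours that the article's calculator doesn't state: a two-sided test, 80% statistical power, and a common normal-approximation formula for comparing two conversion rates. The function name `estimate_duration_days` is made up for illustration, and real calculators may use other formulas and give somewhat different numbers.

```python
from math import ceil
from statistics import NormalDist


def estimate_duration_days(daily_visitors: int, baseline_rate: float, relative_mde: float,
                           variations: int = 2, significance: float = 0.95,
                           power: float = 0.80) -> int:
    """Rough number of days needed to detect a relative lift in conversion rate."""
    # z-scores for a two-sided significance level and the chosen power (assumed 80%).
    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)
    z_beta = NormalDist().inv_cdf(power)
    # A relative effect of 10% on a 5% baseline is an absolute change of 0.5 points.
    absolute_effect = baseline_rate * relative_mde
    variance = baseline_rate * (1 - baseline_rate)
    users_per_variation = ceil(2 * (z_alpha + z_beta) ** 2 * variance / absolute_effect ** 2)
    # All variations share the daily traffic.
    return ceil(users_per_variation * variations / daily_visitors)


# The scenario from this section: 5k daily visitors, 5% conversion, 2 variations.
for mde in (0.10, 0.05, 0.01):
    print(f"{mde:.0%} effect: {estimate_duration_days(5000, 0.05, mde)} days")
```

Under these assumptions, the sketch gives 12, 48, and 1194 days for the 10%, 5%, and 1% effects in our example. Note how halving the effect size roughly quadruples the required duration.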

Deal With the Tech Side

You will need app developers' help with two things:

- Integrating an A/B testing tool into the app. - With Google Analytics, it's just a matter of minutes. Integrating another external A/B testing service or crafting a built-in one could take longer, maybe a few days.

- Developing an update - a new version of the app with a new design, modified subscription plans, or whatever we decided to test. - It's going to take just as long as if we were simply rolling out the update.

Configure and Run the A/B Test on Google Analytics

It will take a few minutes. In the Behavior section of Google Analytics (this is the Universal Analytics layout; Google Analytics 4 properties are organized differently), create a new experiment.

Indicate:

- Objective - the metric we’re trying to influence, say the bounce rate on the subscription screen.

- Percentage of users to be part of the test.

- Minimum time the test will run.

- Links for the original version and the tested variation.

That's it!

Analyze Results

Basically, we can end up with three types of results:

- Success - the target metric shifted as anticipated. - Time to celebrate and roll out the update.

- Failure - the target metric's shift is not statistically significant, but there's a slight trend. - We might consider redoing parts of the update, such as a deeper redesign of the subscription screen for better results.

- Failure - the target metric hardly shifted. - Best to explore why our assumptions fell flat and determine the vital points needing further exploration.
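The A/B tool runs the significance check for us, but it's useful to see roughly what that check involves. Below is a Python sketch of a two-proportion z-test, one common way to compare conversion rates; we're not claiming it's the method any specific tool uses. The function name `two_proportion_p_value` and the conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, users_a: int, conv_b: int, users_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    # Pooled rate: the conversion rate we'd expect if A and B were really the same.
    pooled = (conv_a + conv_b) / (users_a + users_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Made-up results: 30,000 users per group, 5.0% vs 5.6% conversion.
p_value = two_proportion_p_value(conv_a=1500, users_a=30000, conv_b=1680, users_b=30000)
print("significant at 95%" if p_value < 0.05 else "not significant", round(p_value, 4))
```

A p-value below 0.05 corresponds to the 95% significance level we set earlier. A value above it, combined with a visible trend in the right direction, is the "slight trend" case from the list above.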

What Are the Costs of Conducting A/B Testing?

- Costs for using an A/B testing tool, if it's not free.

- Costs of integrating an A/B testing tool or developing a built-in one.

- Costs of developing the app update that's being tested.

If the A/B test is a success, you're spending roughly the same as you would for a typical app update. If the test fails, it means you've lost the money invested in crafting the new version. At the same time, though, it's saving you from the risks of launching an unsuccessful update.

Benefits of A/B Testing Monetization Specifics

- Profit increase. - The ultimate goal, but it's a rare catch in the short term. A modest boost in conversion rates doesn't usually get a chance to translate into a significant profit gain during the test period.

- Better app metrics. - More users starting free trials, subscribing, renewing, clicking ads - and that's a fresh kick for your business.

- Deeper user understanding. - Grasping what connects with them, their motives - such information is priceless for crafting your business strategy.

Conclusions

A/B testing is no longer just a tool - it's a critical component of strategic app development. As user behaviors and technology continue to evolve, staying agile and data-driven is key to sustainable monetization.

Ready with a new app update? Hold up and consider A/B testing over immediate launch. It remains an economical, impactful way to pinpoint what boosts your app's income and make choices backed by facts.

Disclaimer: Monetization strategies should always balance user experience with business objectives.

The mobile monetization landscape is complex and constantly changing. We're always eager to hear about your specific challenges and insights. Contact us to discuss how A/B testing can transform your app's revenue strategy.